Pictures of your face are probably online, right? But what if we told you that the vast majority of your pictures are actually invisible to you, made by machines, for other machines to see. That is the reality that artist Trevor Paglen is investigating in his current exhibition, "A Study of Invisible Images" at Metro Pictures, on view until October 21st. His new works reveal not only a new type of image made by artificial intelligence, but also a new structure of power asserted by those that benefit from these "invisible" images. Here, Artspace's Loney Abrams speaks with the artist about how autonomous object recognition services can track people even when they think they're offline, about how art history needs to make way for vision that doesn't require human eyes, and about the dangerous biases programmed into artificial intelligence.

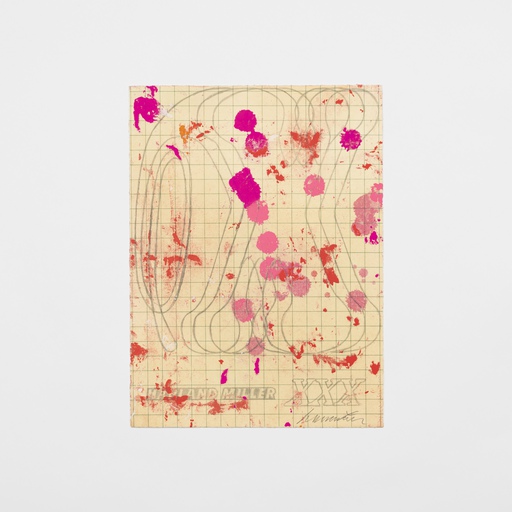

Trevor Paglen, A Man (Corpus: The Humans) (2017). All images courtesy of Metro Pictures and the artist.

Trevor Paglen, A Man (Corpus: The Humans) (2017). All images courtesy of Metro Pictures and the artist.

For this new body of work that you’re showing at Metro Pictures you've coined the term “invisible images.” What does that term mean to you?

We’ve arrived at a moment in history where images have undergone a huge transformation. The majority of images in the world are now made by machines for other machines, and it's pretty rare that humans are in the loop. We’ve traditionally thought about images and the role they play in society as being centered on a human observer—a human looking at an image. But we're now at a point where most of the images in the world are invisible. A simple example is a self-driving car that is making tons and tons of images every second to navigate. They're not making those images for humans, they're making them for themselves. So that's one end of the spectrum.

On the other end of the spectrum you have something like Facebook. When you put pictures on Facebook, your experience involves sharing images with your friends, but what's going on behind the scenes is that those images are being analyzed by very powerful artificial intelligence algorithms and are scrutinized much more closely than your friends would scrutinize them, for example. So what I'm talking about when I say “invisible images” is the advent of autonomous seeing machines that don't necessarily involve humans, and that's the majority of the types of seeing that's going on in the world now.

Can you give me a few more examples of autonomous seeing machines?

Typically downtown, police cars will drive around and take pictures of every single person's license plate. That information will automatically be read and stored, and it will create a record of where a particular person's license plate was at a particular point in time. So that's an example in policing. Another example is in manufacturing and quality assurance. It used to be that a product would go down an assembly line and a person would pick it up and see if it was properly manufactured; we don't do that anymore. You just take a picture of every single thing and an algorithm will analyze if it was properly fabricated or not. So it's found in many, many different domains.

All of these examples are machine to machine—but obviously there's a person somewhere, or more likely a corporation somewhere, that is benefiting from this kind of machine vision. So who is benefiting from this, who owns this data, and what kinds of power structures are being shifted because of this new way of seeing?

In general, who's benefiting is capital: big corporations, the military, and the police. Those are the interests that are generally served by autonomous vision systems. That's true of police taking pictures of license plates, or body cams doing face recognition of the people that police encounter. That's true of autonomous drones and that's true of Facebook trying to figure out whether you like drinking Coke better than Pepsi by looking at images of you drinking.

It’s easy to get worked up about the ethical implications of this kind of surveillance. But ultimately, should I be fearful? What’s at stake here?

First of all, as a white person, these systems are built to benefit you. There are definitely winners and losers in these kinds of systems. In terms of whether you should worry about it or not—maybe you shouldn't worry about it. To me, that’s not the question. There is a kind of art historical and philosophical question: what is an image if you don't need humans anymore? And there's a series of ethical and political questions too that arise from the fact that forms of seeing are also always forms of power. And so I guess the proposal is, how do we wrap our heads around that and not dismiss it so easily?

The Trump administration ordered the web hosting company DreamHost to turn over data about the visitors of a website that was used to help organize Trump inauguration protests. I imagine that facial recognition software as well as GPS phone data could also help the government determine who exactly is at protests and political rallies. How might these invisible images play a role in politics?

They could play a big role. Your phone creates a record of every place that you've been and that record is subpoenable. And often times they don't need a warrant to get that information, so it’s very easy for them to get a record of every single person that was at a protest. That's something that AT&T can easily provide. But it's not only who's at a protest. Did you spend two hours at a bar and then drive home? AT&T knows that. So, does AT&T create a service that allows them to look at those kinds of meta-data signatures and automatically send law enforcement an alert when that happens? That can be done yesterday. That's not a hypothetical question. That could easily exist.

In an essay you wrote for The New Inquiry you explain that one of the major differences between digital images and analog images is that digital images are actually just data that only becomes visible when we have software to interpret it (like iPhoto for example), and a display (like a phone screen.) And even then, the image is only temporary; it exists only when the app is open and the screen is on. The rest of the time, the image exists as data that only machines can understand.

That’s right.

Can you walk me through this machine-driven process that you've now tapped into? How have you hijacked these machine to machine systems to produce your work?

With this body of work there are three kinds images that I'm interested in. The first is what we would call a training image. These are images that humans create in order to teach machines how to see. For example, if you want to train a computer how to read handwritten numbers you create a large database of handwritten “1”s, handwritten “2”s, handwritten “3”s, et cetera. Then the machine will learn how to distinguish from one kind of number to the next. There are more advanced versions of that where you train it to detect a pedestrian, or face detection. When you use your phone and see a box around someone’s face, it’s been trained to know what that face looks like. So the images that are used to train those forms of computer vision are one section that I’m interested in. And I think about it as ultra structuralist photography in that it’s photos that humans make for machines—photography with an audience that is not human.

The second kind of images would be images that show you how machines read a landscape or read a face or something. What does a self driving car actually see when it's looking at the street? So over the past couple of years in the studio we've built a programming language that allows us to take a photograph or video and to see it through an algorithm that you would use for a guided missile, for example. I think about these as machine-readable landscapes in a way. And that’s what some of the images are in the exhibition.

Then finally, the third kind of image are images that machines make for themselves to understand other images. An example of that would be the portrait of Frantz Fanon in the exhibition. Facebook has the best facial recognition software in the world. It's much better than human eyes. It’s like 99 percent accurate or something. If it saw a picture of you as a baby it would know that it was you. Very generally speaking, the way that a lot of facial recognition algorithms work is by taking all of the pictures of you that you’ve uploaded or other people have tagged with your name, and it makes a composite face out of all of them. Then it will take a composite picture of every other human in the world to make a composite face of "the humans." It will take your composite face, subtract "the humans" from you, so that it isolates the features that are unique to your face. In other words, it determines what's different from your face and every other face of humans in the world. That's how they recognize you. And that's what the portrait of Fanon is. It’s part of this series of portraits of people as seen through facial recognition systems that have been trained to recognize them. It’s native form is to be indivisible to humans. It's not made by humans and it's not for humans. You can turn it into something that humans can see but that's not the point of it.

Trevor Paglen, Fanon (Even the Dead Are Not Safe) (2017)

Trevor Paglen, Fanon (Even the Dead Are Not Safe) (2017)

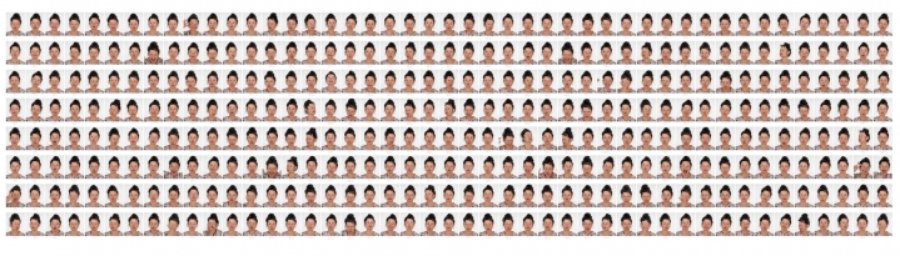

The weird surrealistic-looking works in the back of the exhibition are playing with the kinds of assumptions that tend to be built into object recognition technologies and artificial intelligence technologies. And the piece with all the portraits of Hito Steyerl is about that as well. What is going on there is a very standard use of AI is to create autonomous systems that can recognize objects. For example, you can build an AI that can recognize everything that's in kitchens. We give it a thousand pictures of a fork, a thousand pictures of a cup, a thousand of pictures of an oven, and it learns how to recognize all these things. Then you attach a web cam to it and point it around the kitchen and it will say, “that's a plate, that's a spoon,” et cetera. A much more advanced version of that will be used by a Facebook to try to recognize the kinds of clothes you're wearing, what kinds of products surround you in photographs, and that sort of thing.

So, when you build these kinds of object recognition networks that can do that, you have to train them, and you use training images to do that. It can always only recognize a finite number of things, and you have to predetermine what it recognizes. It will only ever see things that it's been trained to see. So I built AIs but instead of using a taxonomy like things that are in kitchen, or things like Coke and Pepsi, I'm creating taxonomies from literature or philosophy or folklore, and I'm using the kind of taxonomy itself as a kind of meta commentary. For example, I built a neural network I call “Omens and Portents” that is an AI that can only see eclipses, comets, black cats, rainbows, and other things that historically have been images of impending doom. So now you have an AI and you set it up and plug it into your web cam and point it around your kitchen and it sees the fork as a comet and the spoon as an eclipse. There's one that's called “American Predators” and it’s an AI that sees carnivorous animals and plants, predator drones, and stealth bombers. There's an AI that's called “Monsters of Capitalism” that can only see monsters that historically have been used as metaphors for capitalism. So Marx talks about capitalism as a vampire that sucks the blood of the working class to feed its undead, immortal self or something like that. Or, the original zombies come from Haiti, and they were the sugar plantation worker whose soul had been stolen and they can only mindlessly work the plantations. The Frankenstein is a kind of allegory for industrialization.

So once we built these AIs that concede these different taxonomies we tell it to synthesize an image. The images that they've synthesized or "hallucinated" are the images in the show.

Trevor Paglen, Comet (Corpus: Omens and Portents) (2017)

Trevor Paglen, Comet (Corpus: Omens and Portents) (2017)

When I first saw those images they were on my computer—little jpegs on my screen. From that vantage point they almost looked like surrealist or abstract paintings, and that maybe the pixelation was due to the jpeg being compressed or poor image resolution. But when you see them in person you realize that every single thing about thes images are digital.

It's completely synthetic. It is not based on any image on the world.

Yet it’s very painterly. It’s the most “arty” of your work.

Totally.

It's so easy to think of these data sets we're talking about as totally objective visual definitions; that since their only purpose is to detect and recognize, the images that they see or make are non-judgmental. But your artful and poetic hallucination images make it clear that these AIs aren’t objective at all. Someone is training these AIs, and depending on what biases the trainers have (for example, in how they might define a "predator"), the AI has the ability to make very impactful "decisions" based on those biased definitions. There’s a bias at work here.

That's exactly right. And that's what that Hito Steyerl piece is about too. There's one AI that’s trying to detect her gender and it's decided that she's 67 percent female and 33 percent male. Somebody decided that some image is 100 percent female and some other image is 100 percent male. So there's always these biases that are built into these systems. In order to show that there’s nothing objective about this, let’s build these systems that are preposterously not objective, but are still using categories that historically humans have organized information into. In other words, “Omens and portents” are real categories that humans have used to make meaning out of images. So the work is really playing with that a lot and thinking about the relationship between meaning and images and power.

Trevor Paglen, Machine Readable Hito (2017).

Trevor Paglen, Machine Readable Hito (2017).

For these works in particular you've coined this term “invisible images,” but you’ve always made invisible images, in a way. You’ve taken aerial footage of NSA headquarters, or very, very long-distance photographs of top-secret military bases. Of course, these physical places are not invisible, but their representation is almost non-existent in the public sphere. Maybe they aren’t invisible but they are non-visible to most people. I'm wondering how your older series led into this one, and how you first began investigating AI and machine vision.

For me it's very natural in the sense that I was looking at spy satellites and drones, and how they communicate. There's communication architecture like underwater cables. And what's inside the cables? Well what's inside the cables are these invisible images! So for me it's very seamless from one project to another. Although they look very different they're all part of these bigger infrastructures that I've been looking at for a long time. And the images themselves are parts of the infrastructure.

The major difference is that in one case you’re actually making the images and now your leaving it up to the machines.

But I'm creating the conditions that the machine is going to use to make the images.

And in the end you reveal something that is unintelligible to us without the use of these representational images—again, whether we’re talking about this work or your older work. So, what do you hope these images reveal to the people who come see this show?

I think there are two things that make this material interesting to me. One is just thinking about the history of images. Like, what are these kinds of images that get created now that are really, really different than the kinds of images that were made in the mid-20th century and in the Renaissance and going back to cave paintings? It’s almost like a study of perspective. For me, the rise of this automated vision is actually a bigger deal than the invention of perspective. So just thinking formally and art historically, what are these new kinds of images? That's one question.

And then the other set of questions are about what kinds of power are flowing through these images. So for example in the Hito piece, what is the power to say this is female and this is male, or this is somebody’s age, et cetera? Who is making those judgments and what criteria are these judgments being made on?

To wrap up, the show at Metro Pictures is up until October 21. Is there anything else on the horizon that you want to mention?

So much stuff! In the Spring I'm launching a satellite with SpaceX, and that was announced a week or two ago. It's being commissioned by the Nevada Art Museum and they're doing a Kickstarter to get a little help with funding. There's also a big mid-career survey happening at the Smithsonian next summer. There's much more but I guess that's enough for now.